An extended essay attempting to synthesize the current zeitgeist around universities, our research ecosystem, and technological stagnation; to argue that at least part of the solution is to unbundle the societal roles the university has taken on; and to suggest some concrete actions.

Universities are a tricky thing. Almost everybody has at least one touchpoint with them: attending as an undergraduate, master’s, PhD, or professional student; working at or with them; knowing someone who did one of those things; seeing or hearing “expert opinions” in media coming from professors; or perhaps seeing them as another world that many people pour time and resources into. Technology is similar in its many varied touchpoints with our lives (I’ll get to the connection between the two in just a moment).

Across a broad swath of domains and political positions, there’s agreement that:

- Universities are important.
- There is something amiss with universities.
- Reform of some sort is needed for this important institution.

But there is strong disagreement about:

- Why universities are important
- What is amiss with them
- How things need to change

It’s a blind-men-and-an-elephant situation. Each of us is grabbing the part of a massive system that is closest to our lives and priorities. Some people see universities as doing a poor job giving students skills for successful careers; others see them abnegating their duty to provide moral instruction to future leaders; others see universities failing in their role of discovering true things about the universe, a failure playing out in the replication crisis and other scandals. From institutional politicization to insufficient political action on important issues, the list goes on.

The part of the beast that I grapple with daily is the university’s role in “pre-commercial technology research” – work to create useful new technologies that do not (yet) have a clear business case. An abundant future, new frontiers, and arguably civilization itself all depend on a flourishing ecosystem for this kind of work. But in the years since we started Speculative Technologies to bolster that ecosystem and unlock those technologies, we have experienced first-hand a sobering truth: universities have developed a near-monopoly on many types of research. And like many monopolies, they are not particularly good at all of them.

It’s impossible to talk about any specific university issue without stepping into a much deeper conversation about the institution as a whole and how we got here. During the 20th and early 21st century, universities developed a monopoly on so many societal roles. These coupled monopolies mean that you cannot fix any specific problem without touching many others at the same time. Those changes require understanding the roles that have been bundled into a single institution, their particular pathologies, and how they play tug-of-war with the university’s incentives.

There is no single solution here. Silver bullets don’t kill wicked problems! But there is a meta-solution: unbundling the university.

For those not up on their Silicon Valley jargon: you can think of universities as a massive bundle of societal roles, from credentialing agency to think tank to discoverer of the laws of the universe to generator of new technology. Unbundling means creating new institutions that are specialized to excel at small subsets of those roles.

However, the solution is not to raze the system to the ground: destroying institutions outright almost never works. Considering the historical longevity of universities and the Lindy effect, the odds are that Harvard will outlast the United States. Unbundling is arguably good for the universities themselves, enabling them to focus on the things they are best at. (Of course, reasonable people disagree about what those things are!)

To be explicit, the point of this piece is severalfold:

1. To try to synthesize the different viewpoints on the university.
2. To argue that the university story is intimately coupled to the technology stagnation story.
3. To create a big tent among the different groups of people who care about the different aspects of #1 and #2.
4. Finally, to suggest a path forward.

We’ll follow a path that looks a bit like the “machete order” of Star Wars:

- Part 0 is an executive summary, laying out the core thesis for busy people.
- Part 1 is about pre-commercial technology research: what it is, why it’s important, the ways that academia has created a stranglehold on its creation, and why that’s bad.
- Part 2 jumps back in time to see the backstory of the university, how it ended up in this monopoly position, and how it’s taken on so many important societal roles.
- Part 3 explores possibilities for the future.

0. Executive Summary

21st century universities have become a massive “bundle” of societal roles and missions, from skills training to technology development to discovering the secrets of the universe. For the sake of many of these societal roles, and arguably for the sake of the universities themselves, universities need to be unbundled. In particular, we need to unbundle pre-commercial technology research from academia and universities.

Universities have been accumulating roles for hundreds of years, but the process drastically accelerated during the 20th century. (For an extended version of this story, see section 2.) A non-exclusive list of these missions might include:

-

Moral instruction for young people

-

General skills training

-

Vocational training for undergraduates

-

Expert researcher training

-

A repository of human knowledge

-

A place for intellectual mavericks/ a republic of scholars

-

Discovering the secrets of the universe

-

Inventing the technology that drives the economy

-

Improving and studying technology that already exists

-

Credentialing agency

-

Policy think tank

-

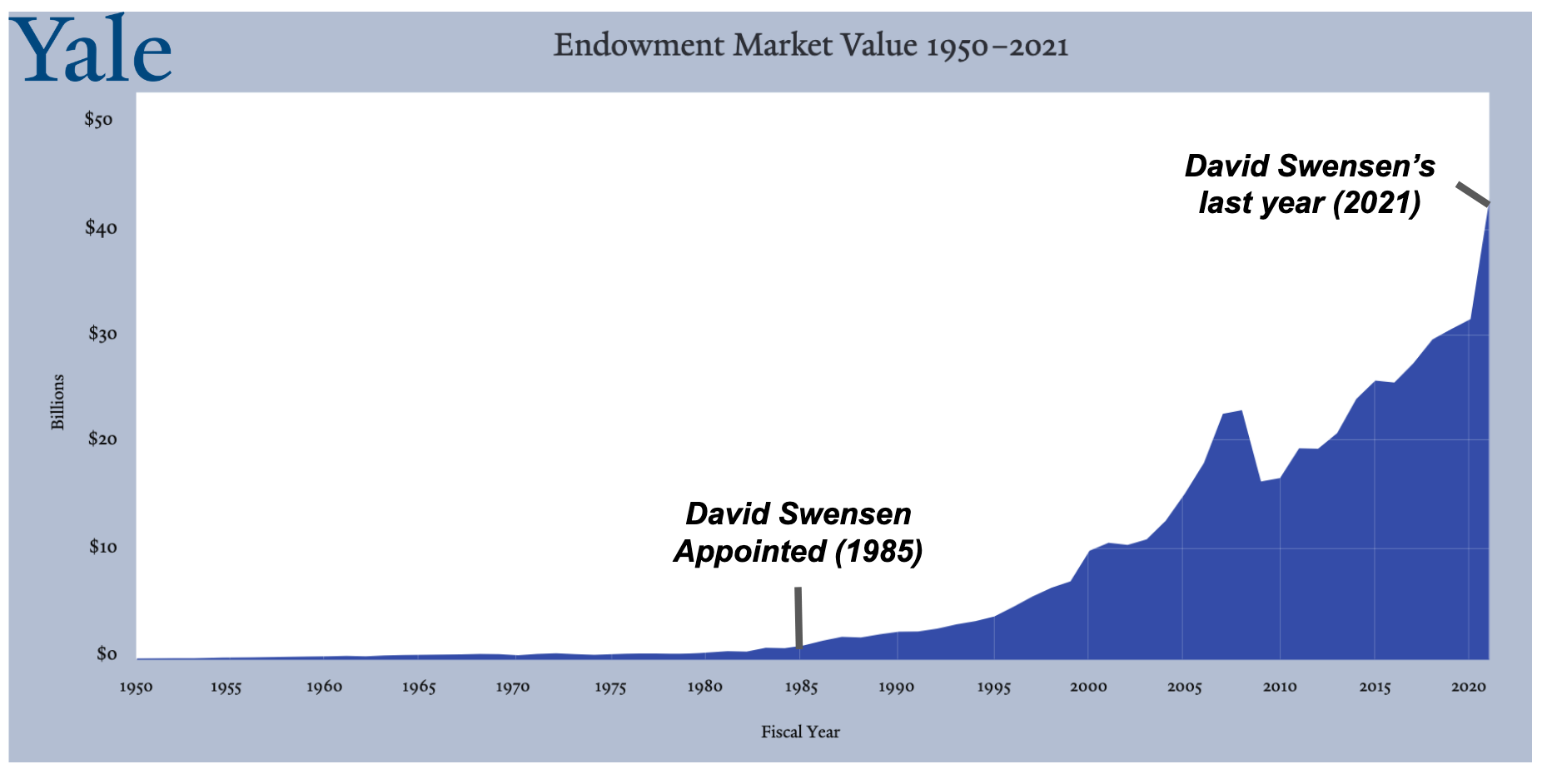

Hedge fund

-

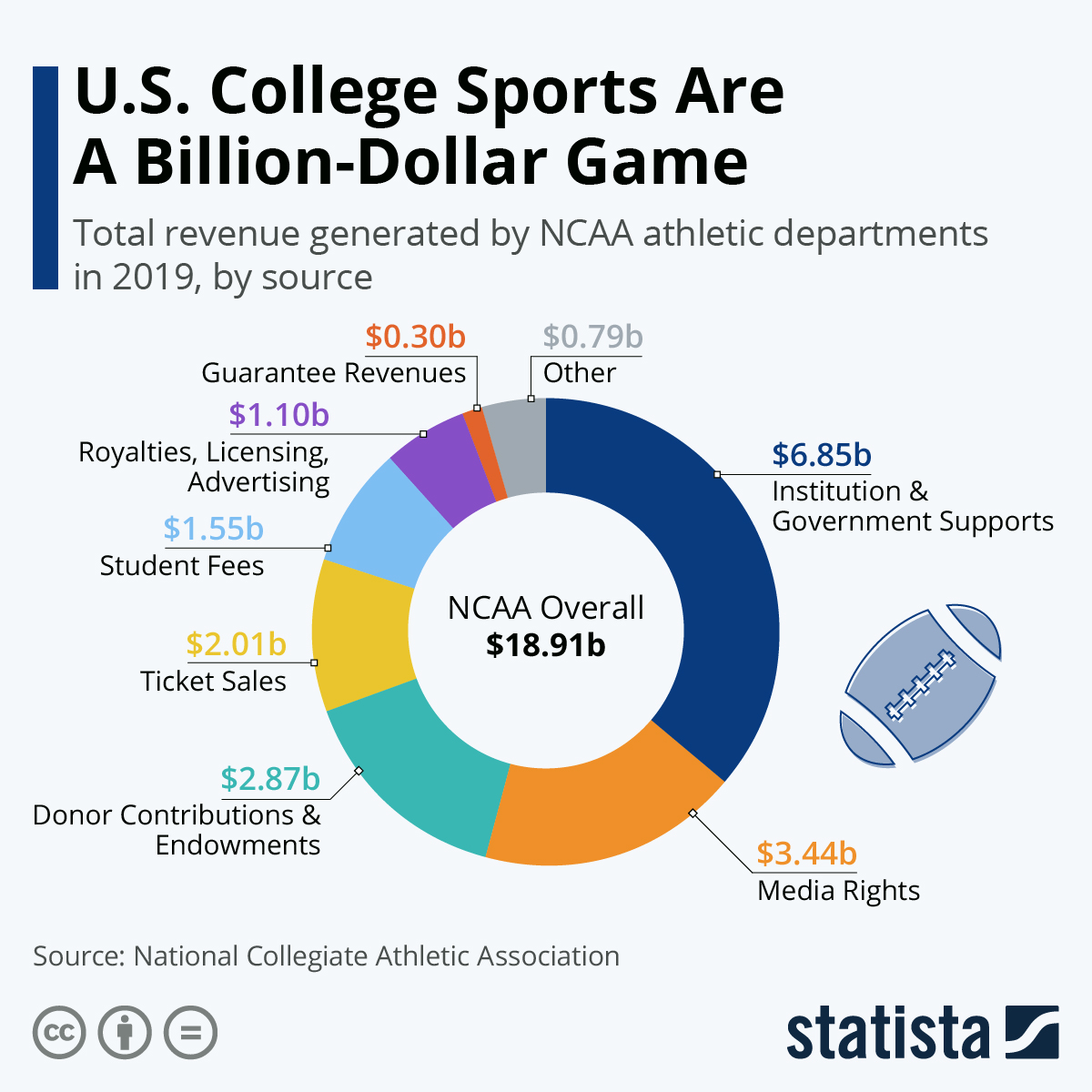

Sports league

-

Dating site

-

Lobbying firm

- Young adult day care

These different missions all come with money, status, and vested stakeholders. Money, status, and stakeholders in turn have created a self-perpetuating bureaucratic mess that many people are unhappy with for drastically different reasons. One point of agreement, regardless of which roles you care most about: Universities are no longer balancing these missions well.

“University issues” evokes many different things in different people’s minds. Consider these stylized but true anecdotes:

Alice went to grad school to advance clean energy technology. She spent five years doing work that was published in top journals but would never actually scale. The technology never left the lab.

Bob enrolled to become a better thinker and engage with great ideas. He found adjuncts racing between campuses teaching standardized intro courses, while tenured professors focused on publishing papers nobody reads.

Carol expected college to open doors to a career. She’s now serving coffee while working on her second master’s because she was told “your degree doesn’t matter, just explore!”

Dave’s breakthrough battery chemistry is stuck in tech transfer limbo. After 18 months of negotiations, the university still wants 30% equity and exclusive licensing rights. No investor will touch it.

Eve spent her entire life striving to become a professor because she loved doing math and building experiments. She now spends 80% of her time writing grants, managing bureaucracy, and navigating university politics instead of thinking about physics.

Frank joined a university to start an unconventional new research center after years working in the federal government. Clearly no stranger to slow-moving bureaucratic organizations, he rage-quit after six months because he couldn’t get anything done.

Grace joined a prestigious lab hoping to uncover the secrets of human nature. Instead, she spent three years optimizing click-bait paper titles and p-hacking results to maintain grant funding.

This situation grew organically through a series of steps that each made sense at the time: from “Universities are where the literate smart people are, so let’s have them educate government administrators instead of just priests” to “the smart people at universities are pretty good at research and it’s a war, so let’s ask them to expand their scope” to “More and more people are going to college, so let’s use graduating from college as a requirement for the large majority of jobs.” (For the full story, see section 2.) But like the peacock evolving to have such a heavy tail that it can’t escape predators or the Habsburg Jaw, processes where each step makes sense can still lead to outcomes with a lot of issues.

There are reasons to avoid marrying cousins even if it keeps power in the family…

The university bundle is like expecting every coffee shop to also include a laundromat, a bookstore, and a karaoke bar. There’s nothing wrong with a laundromat-coffeeshop-bookstore-karaoke bar, and in fact, that may even be exactly what some people want. The problem is that when every coffee shop is also a laundromat, bookstore, and karaoke bar, it’s hard to imagine them making the best coffee they possibly could. Furthermore, there’s work that someone might want to get done in a quiet coffee shop that’s hard to do over blasting karaoke music and bad singing; water from leaking washing machines might damage the books, the people waiting for their laundry might ruin the vibe for karaoke singers; and a host of other problems, big and small.

The solution isn’t to shut down all the laundromat-coffee shop-bookstore-karaoke bars or make a law that nobody can serve coffee in the same building as a laundry machine. Nor is the solution the common sentiment: “ah, if only we created a new laundromat-coffee shop-bookstore-karaoke bar that prioritized the function I care about.” Instead, we need to encourage people to start places that just serve coffee or just do laundry (or just sell books and make coffee but not laundry).

For the sake of education, science, and culture, we need a diverse ecosystem of institutions. This ecosystem probably includes universities, but prevents any specific monoculture.

The right mix is probably impossible to know a priori. The unpredictability of the new ecosystem is a feature, not a bug; underspecification leaves room for people to try all sorts of experiments. Just for discovering the secrets of the universe you can imagine all sorts of institutions: one rewards the wackiest ideas, one prioritizes just trying stuff really fast, and one is set up to do work that only has external milestones every 100 or more years. Now imagine that for roles from credentialing to education to skills training.

It’s fine and good to say “unbundle the university” but what does that mean concretely?

There is a whole laundry list of things that will enable other institutions to spring up, and you can do them from many different positions: in private companies, foundations, or governments, or in your capacity as an agentic individual. Here are some, but nowhere near all of them:

Things organizations (governments, foundations, etc) can do:

- Stop requiring university affiliations for grants.
- Reduce cycle times for funding research.
- Experiment with how universities are run.
- If you have access to spare physical resources like lab spaces or machine shops, make it possible for unaffiliated people to use them.

Things individuals can do:

- Judge people on portfolios, not degrees.
- Give people a hard time for getting unnecessary degrees.
- Focus on how effective organizations are at achieving their stated goals instead of assuming that “the Harvard Center for Making Things Better” is actually making things better even though it’s in the name and it has fancy affiliations and lots of money.
- Celebrate institutions and individuals who support weird institutional experiments.
- Create ways for people to learn about culture and the humanities outside of universities.
- Simply stop expecting universities to be the solution to society’s ills.

On top of interventions to clear the way for new institutions, unbundling needs people to build genuinely new ways of fulfilling roles that we have heaped onto universities. Think of how Oracle succeeded by specializing in building databases, which were just one part of IBM’s business, and of a thousand other examples like it. These institutions will take many forms: from informal groups to high-growth startups to open-source projects to ambitious nonprofits.

Unbundling pre-commercial technology

While it’s not clear what roles should or should not be bundled together, I am confident that pre-commercial technology research can happen much more effectively in a new institution. Academia’s core structures and incentives revolve around education and scientific inquiry, not building useful technologies.

Quick aside on definitions: ‘Pre-commercial technology research’ is a nebulous term I’m using for work that is intended to create useful technologies but is not a good investment (yet or ever). To a large extent, this is synonymous with work to bridge the ‘Valley of Death’ between initial work to create a technology and making it into a commercial product. Note that there is lots of research that isn’t pre-commercial technology: academia can be good for many inquiries into the nature of the universe. (For a much more thorough explanation of pre-commercial technology research, see Section 1.)

Let’s look at a specific situation: trying to spin up a program to unlock technology for pulling CO2 and methane out of the atmosphere and turning it into useful complex stuff. (This is a thing we are trying to do at Speculative Technologies, so this isn’t just a made-up example.) If we were able to do this, it would enable us to make all the great things we make out of petroleum (plastics, drugs, commodity chemicals) but without the petroleum. This work makes no sense as a startup because it requires a big chunk of up-front research but the chemicals are incredibly low margin. The actual work entails things like finding and engineering the right enzymes, figuring out how to get them working in a continuous flow system, etc. There are about five independent projects that need to happen, each requiring specialized skills and millions of dollars of equipment. Unless you want to buy all that equipment and hire all those specialists, the only place you can turn to is university labs.

But here’s a laundry list of things you then need to do just to get a program like this going:

- First, you need to negotiate with five different professors, none of whom are actually going to be doing the hands-on work and each of whom will probably spend at most 20% of their attention managing the project. Those professors’ incentives are to get tenure, fund their labs, graduate their students, and publish discrete chunks of work that their community finds new and interesting, in roughly that order. Maybe they have ambitions of doing work that could turn into a company, but none of those priorities are “make the bigger system work.”

- Once things are hashed out with the professors, you need to negotiate with the universities. Chances are that you will need to negotiate with several different parts of each university: the tech transfer office, the grants office, and the general counsel. This can take months and thousands (tens of thousands?) of dollars of legal fees. The universities’ incentives are to avoid being sued, to follow policy, and to get paid – in that order. Many universities default to charging more than 30% overhead (which means that the university gets 30 cents for every dollar that goes to research), and demand ownership over any resulting intellectual property.

- Assuming you can actually get everything settled with five universities and five professors, you then need to wait for the professors to hire the grad students or postdocs who will do the actual work. If you’re lucky, a professor has just taken on someone who doesn’t have a project, but it might take until the next crop of grad students is admitted in almost a year.

Once the work finally starts months or years later:

- You will constantly need to course-correct teams that want to go down the “most interesting” path. A grad student may discover that an enzyme they were trying to get to regenerate ATP 10% more efficiently (which is critical for the whole system to hit a reasonable efficiency) exhibits some strange behavior and spend weeks down a rabbit hole figuring out why and then writing up a paper about it. There’s nothing wrong with pursuing things because they’re interesting! But it can be detrimental to bigger goals when you’re creating new technology in coordination with a number of other groups.

- You’ll inevitably need to adjust timelines for a thousand possible reasons: graduate students graduate, postdocs get permanent positions, or professors shift focus for months at a time to get a paper out, teach, or serve on committees.

Now imagine that the projects have hit their goals and you want to actually get the technology out into the world to have an impact:

- The graduate students who actually did the hands-on work need to get their professor’s permission to continue it outside of the university.

- The professor (who spent less than 20% of their time on the program) is unlikely to leave the university to join the company, but they are likely to want a significant chunk of equity and the ability to have a say in the company’s operations.

- The university’s technology transfer office will want their pound of flesh: either large licensing fees or a chunk of equity in any resulting companies. Even if you contract with the university to have access to the technology, the university still owns it and any organization that uses it will need to license it from them. The technology transfer office will probably demand upwards of 10% equity in a company. Normally, you sell an ownership stake in a company for money you can use to build the company, so having a chunk of it gone from day one without any money in the bank makes it that much harder to raise money. Unlike VCs, the people at the tech transfer office don’t actually make more money if the company becomes super valuable, so they don’t have strong incentives to see spin-out companies actually succeed.

- Now repeat that process several times. Remember, the work happened across several different universities and labs because most technologies are systems with different components requiring different technical expertise that all need to work in concert.

Suffice it to say: we have not succeeded in building technology for pulling CO2 and methane out of the atmosphere and turning it into useful complex stuff. There are versions of this story across so many of the roles that universities have taken on.

This is what happens when we allow the world’s most bureaucratic institutions to gatekeep the future of civilization.

More abstractly, here’s a non-exhaustive list of the ways that academia is misaligned for pre-commercial technology research:

- Training academics and building technology effectively are at odds. Having trainees – graduate students and postdocs – do the majority of the work on the knowledge frontier is great for their education (and the pocketbooks of everybody involved except the trainees) but it is at odds with building useful technology. A company where most of the code was written by interns would quickly go out of business, even if they were being supervised by senior engineers. In addition to their inexperience, graduate students have naturally high turnover; they can take years to get up to speed and tacit knowledge is constantly lost. Furthermore, academic labs have no incentive to increase productivity because a lot of research funding is earmarked for training: productivity comes from investing in technology to decrease the number of people you need to do work, which isn’t something you do when you have a lot of funds earmarked for heavily subsidized labor.

-

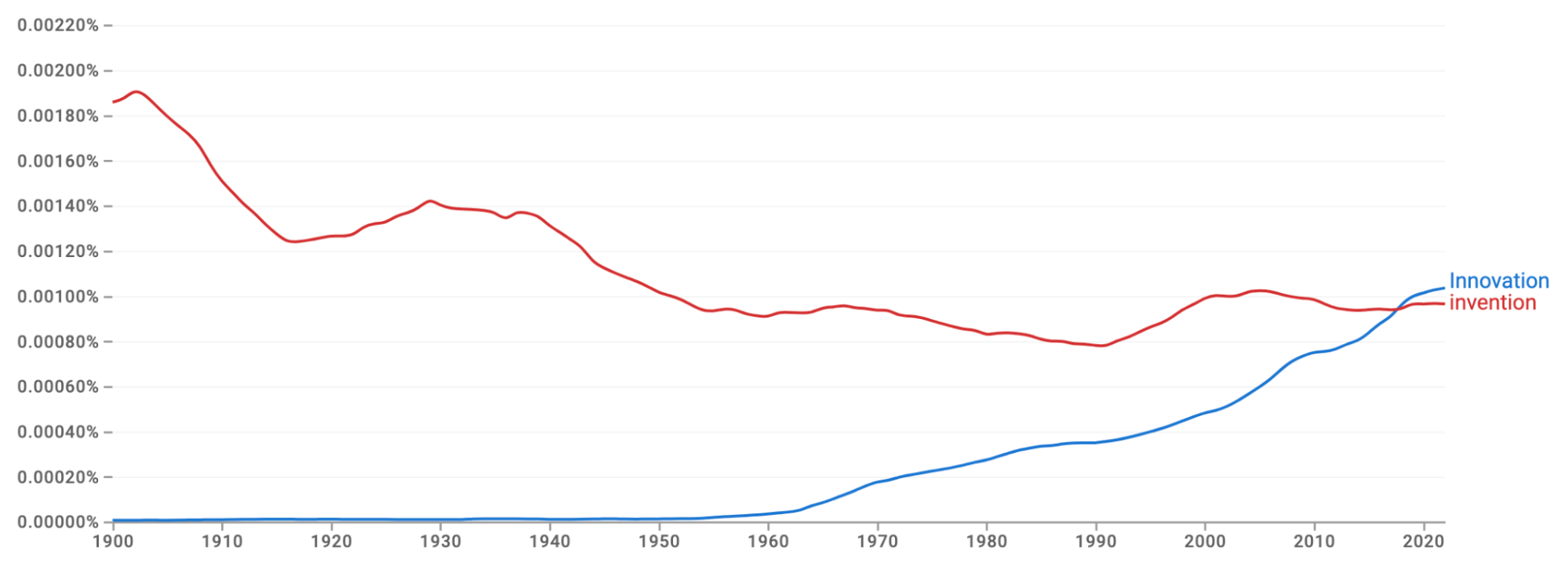

Academia incentivizes new discoveries, not useful

inventions.

Academic

incentives are built around scientific inquiry

to discover the

secrets of the universe. These incentives are direct descendants from

natural philosophy. As a result, academia incentivizes novelty,

discovery, and general theories over usefulness. Paper and grant

reviewers ask “Is this idea new? Does it generalize?” not “Does this

scale or work well in the specific case it’s built for?” Academic

incentives are great for discovering the secrets of the universe, but

not for building powerful technology. Often the work that makes a

technology actually useful is just elbow grease and trying tons of

things out in a

serious

context of use,

long after you’ve discovered the new thing. This

work is something no tenure committee or journal cares about.

- Academic incentives make large teams hard. Successful professors and grad students need to build a personal brand by being first (or last, depending on the field) authors on papers. Awkwardly, there can only be one first author.¹ Large academic teams can certainly happen, but it requires pushing against all the incentives the system throws at you. Building useful technologies requires teams with many specialties working together without worrying about who gets the credit.

-

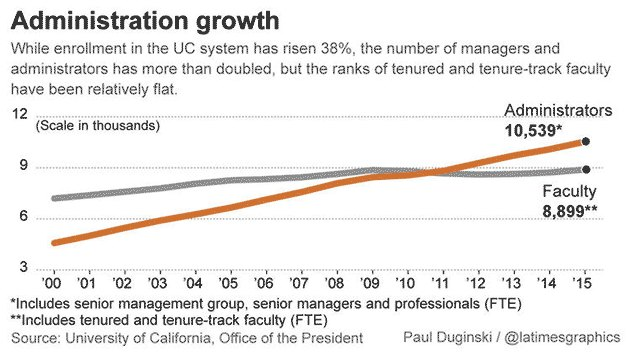

Universities have become a bureaucratic mess.

Arguably, universities have become the most bureaucratic institutions in

the world; I know several people who have worked in both government and

name-brand universities; they say things move more slowly and it’s

harder to get things done at the latter. A back of the envelope

calculation suggests that there are

as many

non-medical administrators at Harvard as researchers.

Large

bureaucracies make it hard to move fast and do weird things, both of

which are critical for creating new technologies.

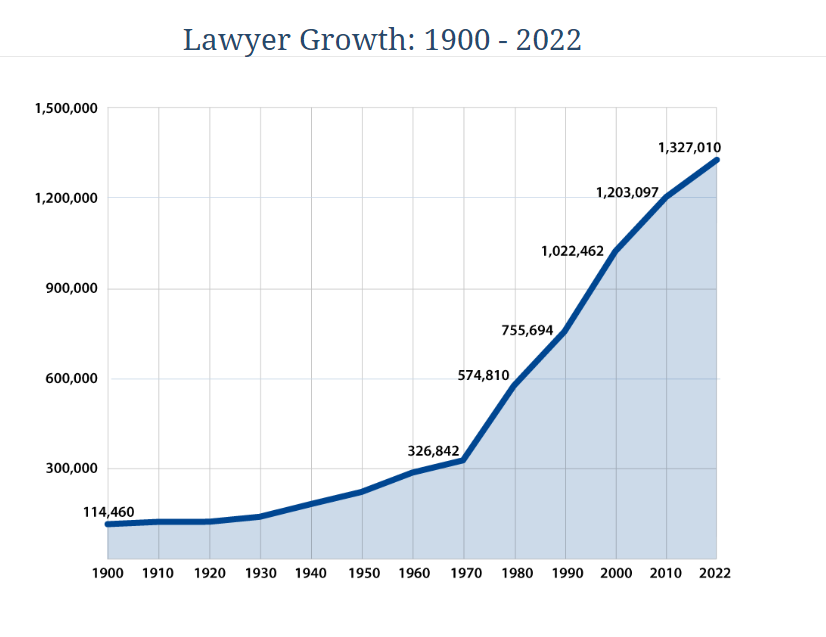

- University tech transfer offices add massive friction to spinning out technologies. With a few rare exceptions, universities own any IP that is created within their walls (even when funded with government or corporate money). This arrangement isn’t necessarily bad (companies own the IP their employees create as well) but to get out into the world, that IP needs to go through tech transfer offices that have few incentives to actually help the technology succeed. Instead, tech transfer offices can drag out negotiations over draconian licensing terms for months or years. It would be one thing if universities depended heavily on tech transfer to support their other activities, but only 15/155 tech transfer offices in the US are profitable and even Stanford only made $1.1B over four decades in licensing revenue. At a global tech transfer summit a few years ago, only two of the top 30 tech transfer professionals at the meeting said generating revenue was a goal of the tech transfer office. Instead, 30/30 said the goal was the poorly-defined “economic development.” It’s a longer discussion, but the best way to achieve that goal may be to just shut down the office. Profit isn’t everything, but it should be a major focus of an organization whose job is to spin out companies. These are not serious people.

(The list goes on, but you get the idea.)

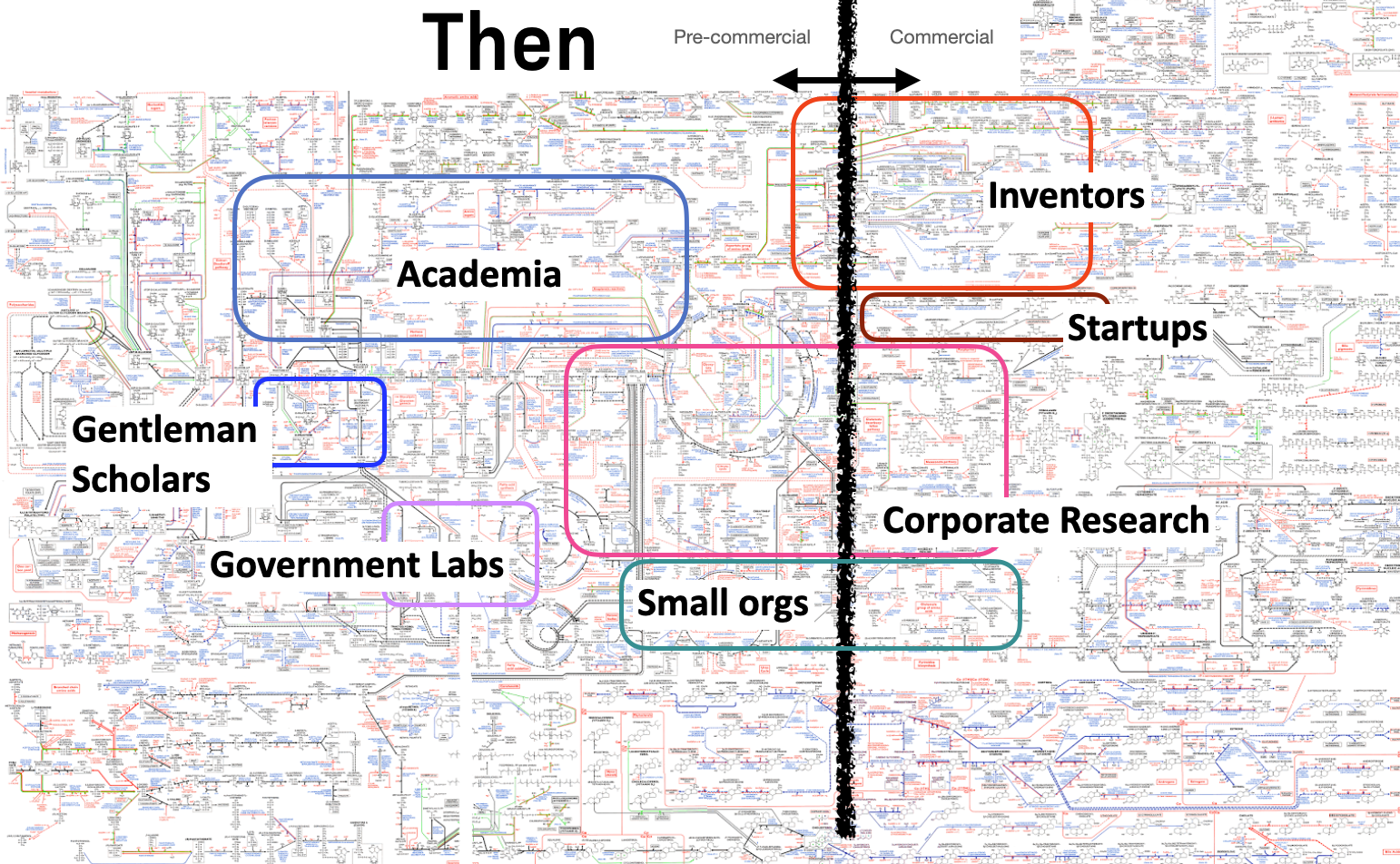

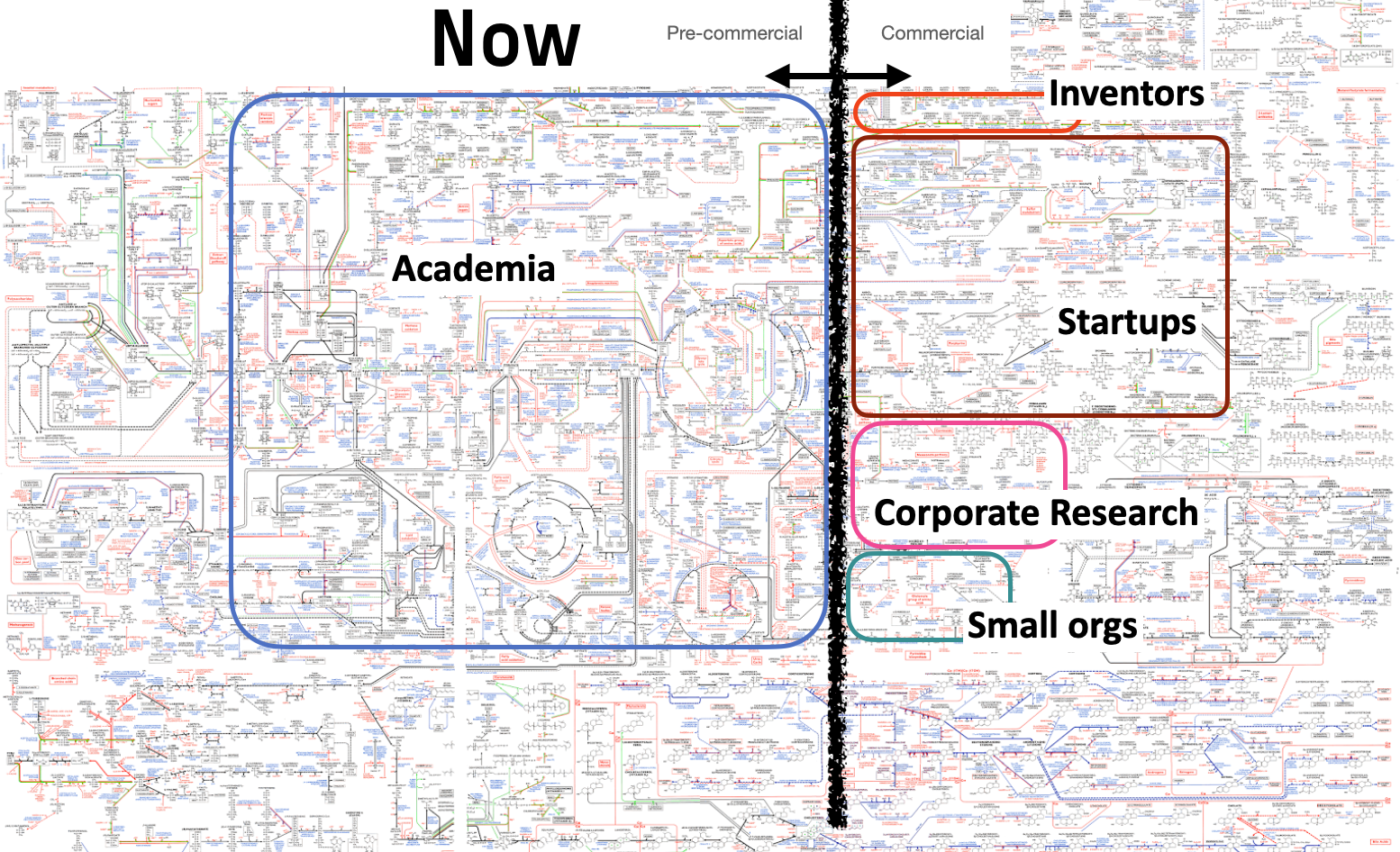

The poor fit between academia and pre-commercial technology research wouldn’t be such a problem if academia hadn’t developed a monopoly on pre-commercial research over the course of the 20th century. Pre-commercial technology research once happened in the basements of inventors like Goodyear or Tesla, high-margin research contractors like BBN, and industrial labs like GE Research, Bell Labs, or DuPont Research. How academia gradually took over this critical ecosystem niche and why we can’t just “RETVRN” is a complicated story of both government and market forces, pressures towards specialization and efficiency, and increasing technological complexity. The gutting of corporate research, disappearance of inventors, and decline of small research orgs is an involved story for another time.

In the world of laundromat-coffeeshop-bookstore-karaoke bars, the academic monopoly is like looking around the neighborhood and noticing “huh, there used to be a bunch of stand-alone karaoke bars but the big coffeeshop-laundromat chain came through, acquired all of them, and installed espresso machines and dryers.”

What about non-university research institutions? There are lots of non-university research organizations ranging from the Broad Institute to the Naval Research Lab. Without students, it’s natural to expect them to avoid many tensions that hamstring universities. While they do remove teaching loads and some bureaucracy, the reality is that they’re still subject to many of the incentives that make universities a poor place for pre-commercial technology research. Many organizations are attached to universities at the hip: it is common for non-university research organizations to have university-affiliated professors as principal investigators or to be actively administered by a university, like many of the national labs. Even within research organizations that have no affiliation with a university, researchers often see academics as their primary peers; in many cases, the role of professor is still the highest status thing one can aspire to. As a result, people still play the same games as academia – scoring points for novelty, discovery, and papers.

The reality is that it’s very hard for individuals and organizations to avoid interfacing with academia if they have an ambitious pre-commercial technology idea. If you want to work on a technology idea that isn’t yet a clear product, you need to either be in or partner with academic organizations for equipment, skills, or status. That, in turn, means slogging through all those adverse incentives and bureaucracies.

Many people have (correctly) noticed that many of the problems in our research ecosystem are driven by incentives: from p-hacking and fraud to citation obsession, incrementalism, and intense bureaucratization. But most of the solutions unavoidably involve academia: new institutes are housed at universities or have principal investigators (PIs) who are also professors; new grant schemes, prizes or even funding agencies ultimately fund academics; the people joining new fields or using new ways of publishing are ultimately still embedded in academia.

Academia’s monopoly means that shifting incentives in the research ecosystem is incredibly hard because most interventions don’t change who is doing the actual work and what institutions they work for. Changes to the research ecosystem are bottlenecked by where the work is done.

Most new research orgs still depend on people working in university labs to do the hands-on research because there are many reasons for working with universities: universities have a lot of (often underused) rare or expensive equipment; universities are staffed by graduate students and postdocs, who provide cheap labor in exchange for training; universities are where the people with experience doing research are. Spinning up a new research institution from scratch is slow and expensive. Hiring people full-time can lock you into research projects or directions.

The advantages of working with universities are why, when we first started Speculative Technologies, we sought to emulate DARPA’s use of exclusively externalized research. However, we’ve come to realize that it’s incredibly hard to do work that doesn’t have a home in existing institutions by working exclusively through existing institutions.

In retrospect, “duh.”

The academic monopoly on pre-commercial research has created a bonanza of research misfits: people and technologies with incredible potential who are poor fits for the academic system. These are the same people and technologies that historically have unlocked new industries and material abundance: many Nobel prize winners and world-changing technologists have asserted that they wouldn’t have been able to do the work they did in today’s system.

Yes, we continue to invent, but how many Katalin Karikós didn’t persevere under similarly adverse circumstances? Is it possible that ever-decreasing research productivity is not because ideas are getting harder to find, but because we just keep injecting more friction into the system?

Speculative Technologies needs to be a home for these misfits. Research misfits need an institution that drives towards neither papers nor products, but instead focuses on building useful, general-purpose technologies without being wedded to a specific way that they get out into the world. Sometimes papers are best, sometimes products are best, sometimes none of the above.

Building a home for research misfits

There are at least two ways we are thinking about building a home for these misfits. (Note that these are things we’re actively working on making a reality – if you want to help please let us know!):

- A Hardcore Institute of Technology. Counterintuitively, the way to train hardcore scientists and technologists is not to build yet another school; instead, you start by building a research lab for experienced misfits that is working on real, serious problems. You then start bringing in “journeymen” who have some training or experience. A bit later you bring in “apprentices.” These folks are the equivalent of undergrads and grad students, but there are no grades, no degree, and no accreditation; just experience and trial by fire. This would be like the Navy SEALs of technical training – you know that anybody who comes out of this place is the best of the best. Think about it: portfolios are starting to matter far more than credentials and certain companies are now far better indicators of quality than schools – a successful tour of duty at SpaceX has more signal than a degree from MIT.

- A crucible for new manufacturing paradigms. The US industrial base has been hollowed out. The way to manufacture things cost-effectively in the US won’t be to try to out-China China – they’ve gone so far down the learning curves with current paradigms. The way you compete with an entrenched player is to change the game and leapfrog paradigms: minimills were a new paradigm for manufacturing steel that at first produced an inferior product, but new technology allowed it to take huge market share from traditional steel manufacturing and do it in new places. Similarly, cell phones enabled internet access in Africa and other places without requiring desktops; digital payments leapfrogged credit cards, submarines leapfrogged battleships, the list goes on. Successful American manufacturing in the 21st century won’t look like American manufacturing in the 20th; it will be based on entirely new paradigms. Creating these new paradigms requires more than just startups creating point solutions – it needs systems-level research happening in tight communication with existing industry. In other words, an ambitious industrial research lab focused on building useful, general-purpose technologies and getting them into the world.

All of this requires physical spaces decoupled from the constraints of academia, startups, governments and big corporations. A place for people with brilliant ideas to build atom-based technologies that won’t necessarily work as high-margin startups; to start projects that don’t necessarily fit into a specific bucket. These projects could evolve smoothly into bigger programs, baby Focused Research Organizations, or nascent companies; all united by a common mission to unlock the future.

Conclusion

Speculative Technologies’ core mission is to create an abundant, wonder-filled future by unlocking powerful technologies that don’t have a home in other institutions. Since we launched in 2023, we’ve learned a lot about what is broken in our research ecosystem and how we can best execute on that mission.

One big thing we have realized is the blunt fact that over the past 50 years, universities have developed a near-monopoly on many types of research and, like many monopolies, they are not particularly good at all of them.

Pre-commercial technology research is clearly not the only thing that needs to be unbundled from universities – the world needs new institutions for doing everything from credentialing to vocational training to discovering the secrets of the universe. But it is the place to start because it’s one of the poorest-fitting stones in the wall and the upside of unbundling it is so large.

If you care about universities: Unbundling will not kill academia and universities, but save them. Ask any professor and they will tell you that they have at least 10 jobs, each of which comes at the cost of the others. In large part, these roles are downstream of the laundry list of roles bundled into a single institution. If you ask different people what the role of a university or professor is, you’ll get many conflicting answers. Unbundling at least some of the roles would let the teachers teach, the inventors invent, and the scientists discover.

Pragmatically, it’s also a bad bet to wager on the destruction of one of the longest-lived human institutions. Harvard was here long before the United States and I will bet that it will be here long after. Instead, unbundling will put competitive pressure on universities to up their game and focus on what they’re best at.

Regardless of your position, from deep institutionalist to radical revolutionary, it’s clear that something needs to happen: unbundling pre-commercial technology research is a high bang-for-your-buck start.

Every week, I run into misfits with brilliant, potentially transformative technology ideas that are poor fits for either academia or startups. Giving those people and ideas a home and the resources to execute on those ideas is how we’re going to play basketball with our great-grandchildren, jaunt to the other side of the world for an afternoon, and explore the stars.

1. Changes to the research ecosystem are bottlenecked by where the work is done

Our ability to generate and deploy new technologies is critical for the future. Why new technology matters depends on who you are: economists want to see total factor productivity increase, politicians want a powerful economy and military, nerds want more awesome sci-fi stuff, researchers want to be able to do their jobs, and everybody wants their children’s material life to improve.

Uncountable gallons of ink and man-hours of actual work have been poured into improving this system — from how papers are published and how grants are made to creating entirely new centers and accelerators. But most of these efforts to improve the system go to waste.

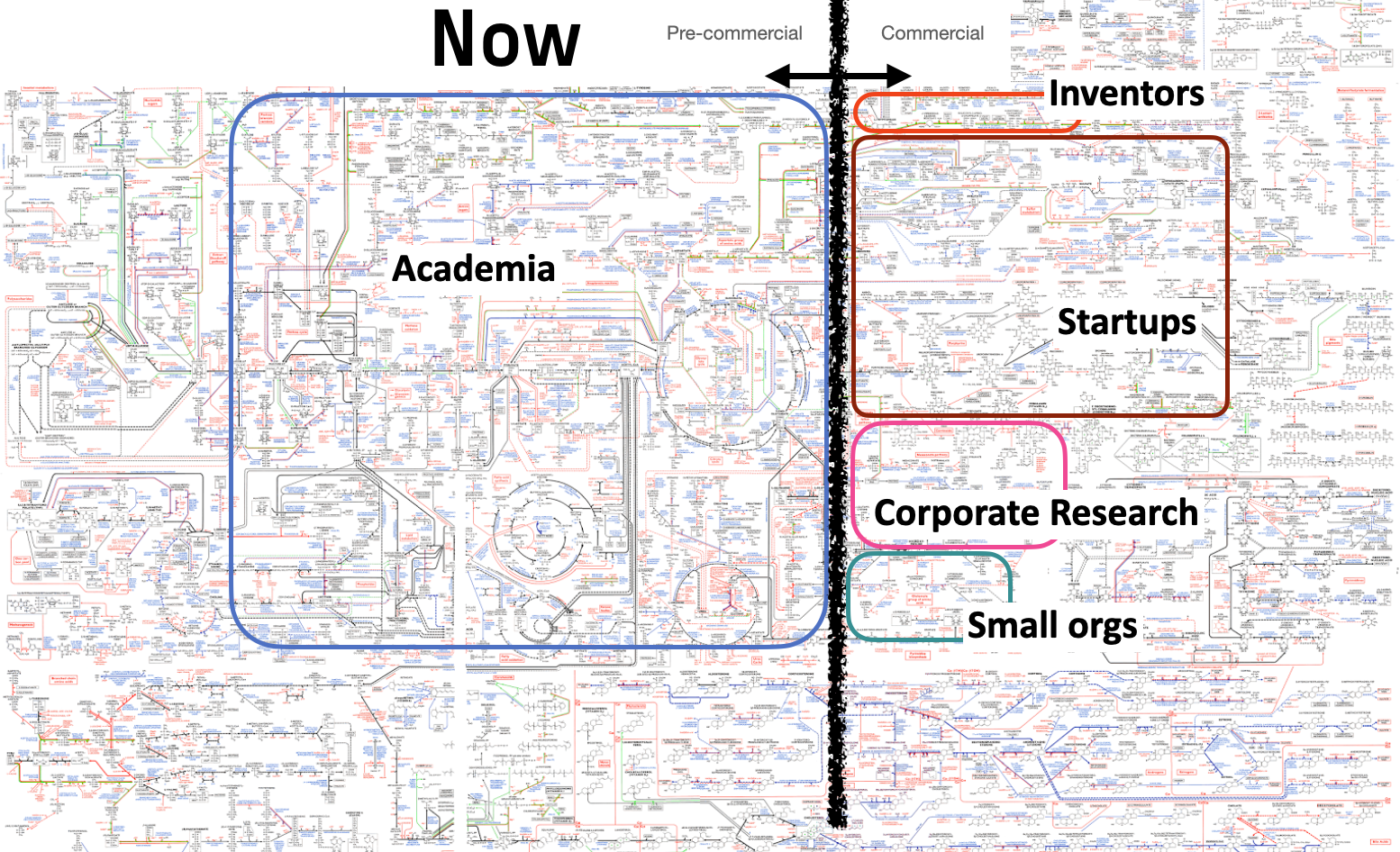

It is almost impossible to change a system when the people who are doing the actual work — the inventing and discovering — are still heavily embedded in the institutions that created the need for systemic improvement in the first place. To unpack that:

- Universities (and academia more broadly) are taking over more and more work that doesn’t have immediate commercial applications. In other words, academia has developed a monopoly on pre- and non-commercial research.

- The friction and constraints associated with university research have increased over time.

- Combined, points #1 and #2 mean that you won’t be able to drastically improve how our research ecosystem works without drastically changing the university or building ways to fully route around it.

There are many reasons for doing research at universities. Universities have a lot of (often underused) equipment that is rare or expensive – there are a shockingly large number of pieces of equipment or tacit knowledge that only exist in one or two places in the world. Universities have graduate students and postdocs, who provide cheap labor in exchange for training. Perhaps most importantly, universities are where the people with experience doing research are: spinning up a new research location from scratch is slow and expensive; hiring people full-time locks you into research projects or directions.

Both for these concrete reasons and because it’s the cultural default, most efforts to enable pre-commercial research involve funding a university lab, building a university building, or starting a new university-affiliated center or institute. But doing so severely constrains speed, efficiency, and even the kind of work that can be done. (You can jump back to the executive summary for a blow-by-blow of how these constraints play out.)

Behind closed doors, even people in organizations like DARPA or ARPA-E will acknowledge that the frictions imposed by working via academic organizations limit their impact. Despite large budgets and significant leeway about how to spend them, the law requires ARPA program leaders to act through grants or contracts to existing institutions instead of hiring people directly. Those rules almost inevitably mean working with universities. Most new research organizations are no different: they still depend on people working for universities and in university research labs to do the actual hands-on work.

We often think of research as creating abstract knowledge, but the reality is that a lot of that knowledge is tacit – it lives only in people’s heads and hands. To a large extent, technology is people. If those people are working in an institution that judges them on novelty, they are going to build technology that is novel, not necessarily useful. If they are working in an institution that judges them on growth, margins, or relevance to existing products, they’re going to tune technology in those directions, rather than towards impact.

An aside: how technology happens and pre-commercial technology work

To really understand why a university monopoly is so bad for our ability to create and deploy new technology, it’s important to briefly unpack how technology actually happens and why there’s a big chunk of that work that isn’t done by rational, profit-seeking actors like companies.

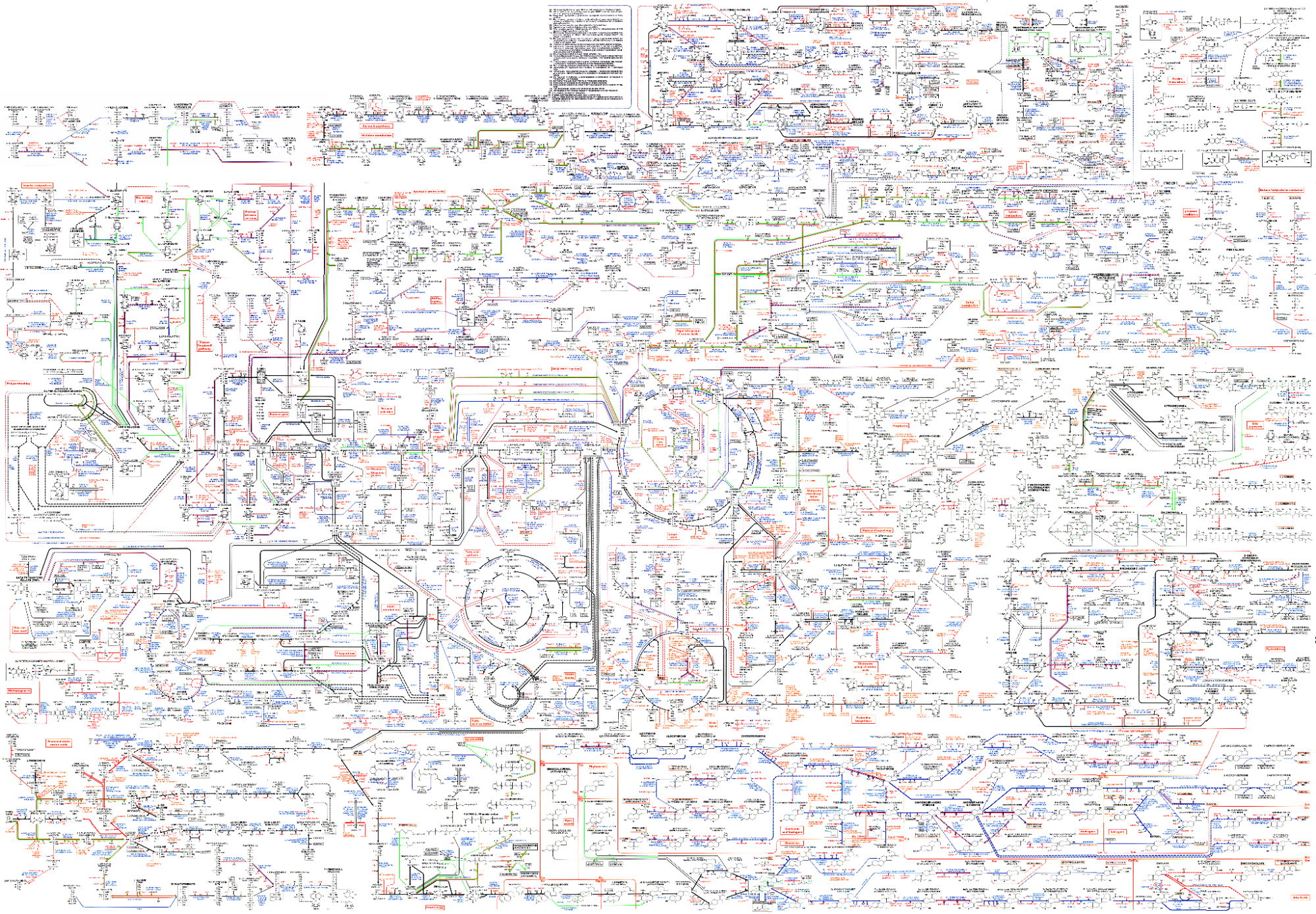

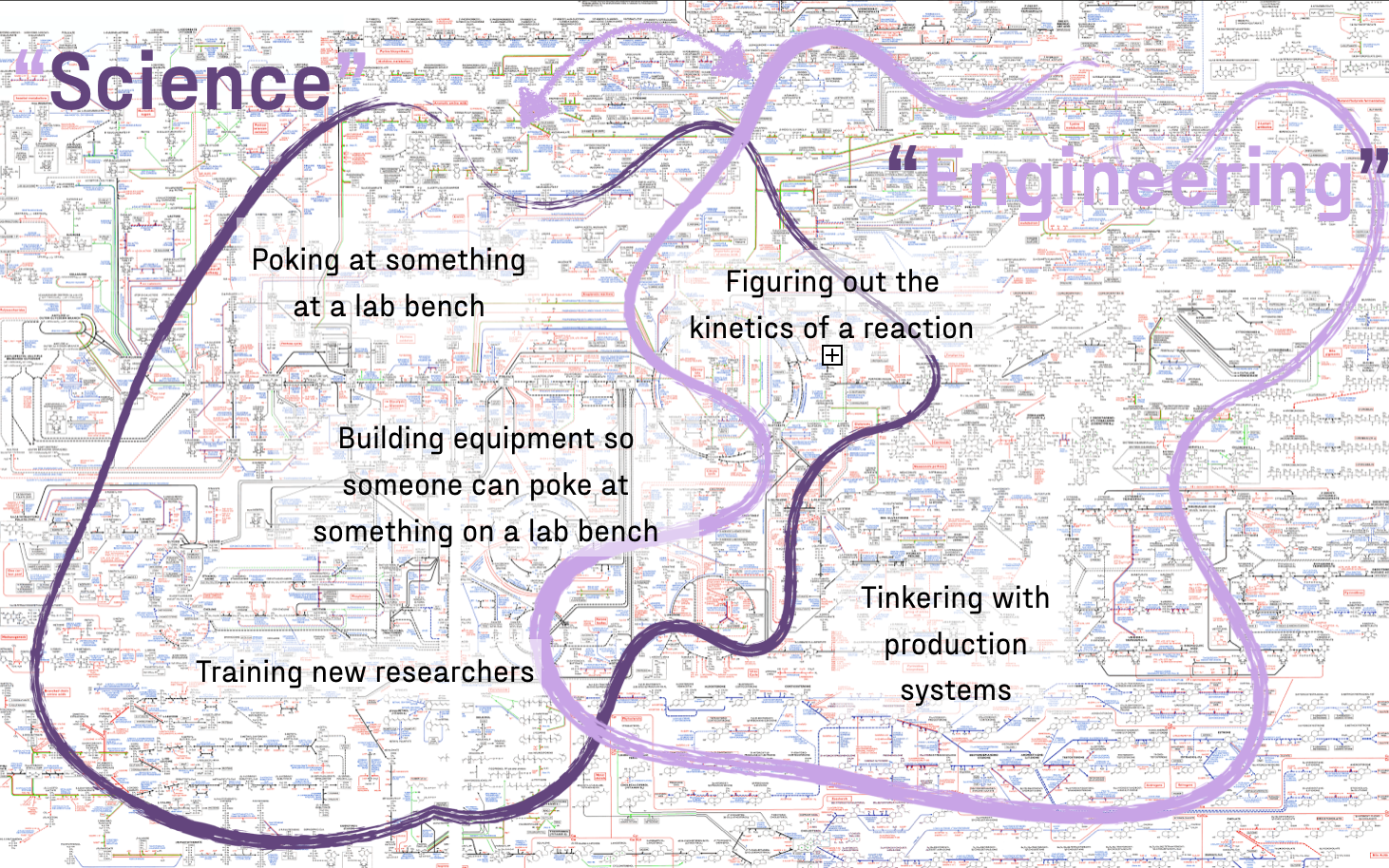

How does technology happen?

Many people (even very smart, technically trained ones!) imagine that the way new technology happens is that some scientist is doing “basic science” – say, measuring the properties of Gila monster saliva because Gila monsters are freaking sweet – when all of a sudden they realize “aha! This molecule in Gila monster saliva might be very useful for lowering blood sugar!” The scientist (or maybe her buddy) then figures out how to make that molecule work in the human body with the idea that it will be a useful drug, i.e. “applied science.” Once that works they figure out how to package it up as a drug, get it FDA approved, and start selling it, i.e. “development.” Once the drug is out in the world, people discover new applications, like suppressing appetite. This is indeed what happened with GLP-1 agonists.

That is not actually how most technology happens.

In reality, the process of creating new technology looks more like how the transistor (which drives all of modern computing) came to be: In the 1920s, Julius Lilienfeld (and others) realize that it would be pretty sweet if we could replace fiddly, expensive vacuum tubes with chunks of metal (ok, technically metalloid). Several different groups spend years trying to get the metalloid to act like a vacuum tube and then realize that they’re getting nowhere and probably won’t make any headway just by trying stuff – they don’t understand the physics of semiconductors well enough. Some Nobel-prize-winning physics later, the thing still doesn’t work without some clever technicians figuring out how to machine the metalloid just right. The “transistor” technically works then, but it’s not actually useful – it’s big, expensive, and fiddly. It takes other folks realizing that, if they’re going to make enough transistors to actually matter, they’ll need to completely change which metalloid they’re using and completely reinvent the process of making them. This process doesn’t look anything like a nice linear progression from basic research to applied research to development.

Technology happens through a messy mix of trying to build useful things, shoring up knowledge when you realize you don’t know enough about how the underlying phenomenon works, trying to make enough of the thing cheaply enough that people care, going back to the drawing board, tinkering with the entire process, and eventually coming up with a thing whose combination of capabilities, price, and quantity means that people actually want to use it. Sometimes this work looks like your classic scientist pipetting in a lab or scribbling on a whiteboard, sometimes it looks like your soot-covered technician struggling with a giant crucible of molten metal, and sometimes it looks like anything in between. All this work is connected in a network that almost looks like a metabolism in its complexity. (All credit for this analogy goes to the illustrious Tim Hwang.)

Ultimately, this work needs to culminate in a product that people beyond the technology’s creators can use: someone needs to buy manufactured technology eventually in a money-based economy. But the work to create a technology is often insufficient for a successful product: you need to do a lot of work to get the thing into the right form factor and sell it (or even give it away and have people actually use it). Very few people want to buy a single transistor or an internet protocol: they want a GPU they can plug straight into a motherboard or a web application.

(This is a speedrun of a much larger topic: if you want to go deeper, I recommend Cycles of Invention and Discovery and The Nature of Technology: What it is and How it Evolves as a start.)

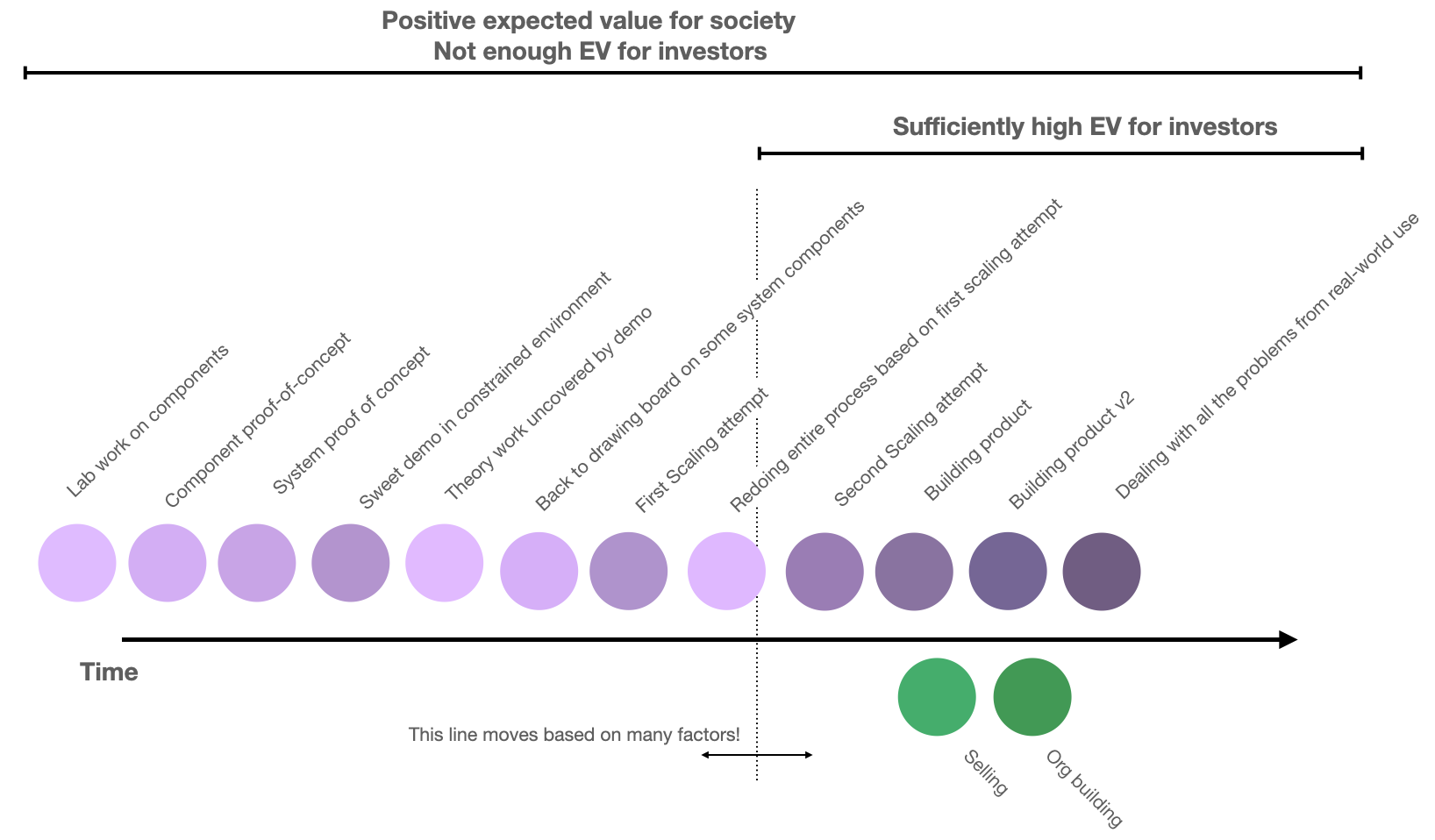

Some of the work to create technology is a poor commercial investment (pre-commercial technology work)

Imagine putting all of the work to create a useful technology on a timeline. If you draw a line at some point in time, you can ask “what is the expected value for an investor of all the work after this point in time (assuming all future work is funded by investment and revenue)?” There will be some point in time where all future work will have a sufficiently large expected value that funding it will be a good investment. All the work before that point is pre-commercial technology research. In other words, pre-commercial technology research is work that has a positive expected value, but its externalities are large enough that private entities cannot capture enough value for funding that work to be a good investment.
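To make the timeline thought experiment concrete, here is a minimal sketch in Python. Everything in it is a made-up assumption for illustration: the stage names, costs, success probabilities, the payoff an investor could capture (`captured_payoff`), and the much larger payoff to society as a whole (`total_payoff`). It computes the expected value of funding all remaining work from each point on the timeline and finds the first point where that bet becomes a good investment.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    cost: float       # money spent attempting this stage (made-up units)
    p_success: float  # chance the stage works, given that it was attempted


def expected_value_from(stages, start, payoff):
    """Expected value of funding every stage from index `start` onward."""
    ev = 0.0
    p_reach = 1.0  # probability that the work gets as far as the current stage
    for stage in stages[start:]:
        ev -= p_reach * stage.cost  # a stage's cost is only paid if we reach it
        p_reach *= stage.p_success
    return ev + p_reach * payoff    # the payoff arrives only if every stage succeeds


def commercial_threshold(stages, captured_payoff):
    """Index of the first stage from which funding all remaining work is a positive bet."""
    for i in range(len(stages)):
        if expected_value_from(stages, i, captured_payoff) > 0:
            return i
    return None  # never a good investment under these assumptions


# Hypothetical timeline, loosely echoing the transistor story above.
timeline = [
    Stage("understand the physics", cost=20, p_success=0.3),
    Stage("working prototype", cost=30, p_success=0.5),
    Stage("manufacturable process", cost=50, p_success=0.7),
    Stage("product", cost=40, p_success=0.9),
]
captured_payoff = 200  # value an investor could capture (assumed)
total_payoff = 1000    # value to everyone, externalities included (assumed)

threshold = commercial_threshold(timeline, captured_payoff)      # index 2 here
society_ev = expected_value_from(timeline, 0, total_payoff)      # positive
investor_ev = expected_value_from(timeline, 0, captured_payoff)  # negative
```

With these invented numbers, the whole project has a positive expected value for society from the very first stage, but an investor only comes out ahead when starting at the third stage. The first two stages are the pre-commercial work. Change the captured share, the costs, or the odds and the line moves.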

Of course, reasonable people will disagree strongly over where that line is. Some people would argue that any valuable work should be a good investment – these folks would put the line at t=0. Others believe it’s after a successful demo, and still others believe it’s at the point where there’s a product to sell.

Of course, technology research doesn’t create a fixed amount of value that then gets divvied up between the public and individuals or organizations. A technology’s impact can vary based on where in its development investors and creators start expecting it to be a good investment and start working to capture value by starting companies, patenting, and selling products. Arguably, Google would have had far less impact if Larry Page and Sergey Brin had just open-sourced the algorithm instead of building a VC-backed startup around it; at the same time, transistors would arguably have had far less impact if AT&T had imposed draconian licensing terms on them or not licensed them at all.

Frustratingly, there is no straightforward way to find the “correct” line between pre-commercial and commercial technology work in any given situation. It’s wrong to say “everything should be open-source,” and it’s equally wrong to say “any valuable technology work should be able to both make the world awesome and its inventors obscenely rich at the same time.” There is no easy answer to “When should an idea that smells like research be a startup?” or the related question, “When should a large company invest in technology research?”

In other words, the line between pre-commercial and commercial work is fuzzy and context-dependent. It depends heavily both on factors intrinsic to a technology and extrinsic factors like regulations, transaction costs, markets, and even (especially?) culture.

These factors change over time. In the late 19th century, George Eastman could start making camera components during nights and weekends in his mother’s kitchen with the equivalent of $95k (in 2024 dollars) of cash borrowed from a wealthy friend. He used the revenue from those components to build more of them, expand the business, eventually go full-time, and invent roll film. Today, some combination of overhead and development costs, together with expectations around uncertainty, scale, timelines, polish, returns, and other factors, means that it can take hundreds of millions or billions of dollars to bring a product to market.

In the early 20th century, the stock market was basically gambling – most investors ended up breaking even or negative; in the late 20th century, the stock market reliably returned more than 10% annually. In the early 20th century, vehicles breaking down (or exploding!) was a regular occurrence; now a plane crash is an international incident. Many of these changes are good, but they add up to a world where more technology work falls on the pre-commercial side of the pre-commercial/commercial divide.

This story skips a lot of details, counterarguments, and open questions. One should certainly ask “what would it take to make more technology work commercially viable?” It’s likely there are new organizational and financial structures, friction reductions, and cultural changes that could make more technology work commercially viable. But as it stands, pre-commercial technology work is more important than ever. At the same time, it is increasingly dominated by a single institution.

Academia has developed a monopoly on pre- and non-commercial research

In the 21st century, it’s almost impossible to avoid interfacing with academia if you have an ambitious pre-commercial research idea. This goes for both individuals and organizations: if you want to do ambitious pre-commercial research work, academia is the path of least resistance; if you want to fund or coordinate pre-commercial technology research, the dominant model is to fund a lab, build a building, or start a new center or institute associated with a university.

A quick note on definitions: academia is not just universities. Modern academia is a nebulous institution characterized by some combination of labs with PIs being judged on papers and labor being done by grad students.

You can think of academia as asserting its monopoly in four major areas (ordered in increasing levels of abstraction): physical space, funding, mindsets+skills+incentives, and how we structure research itself.

Physical space

If you have a project that requires specialized equipment or even just lab space, the dominant option is to use an academic lab. Companies with lab spaces rarely let anyone but their employees use them. You could get hired and try to start a project, but companies have increasingly short timescales and tight focus, which precludes a lot of pre-commercial research.

There are commercial lab spaces, but they are prohibitively expensive unless you have the kind of budget that is hard to come by without venture funding or revenue. (That is, it’s hard to come by in the context of pre-commercial research!) Furthermore, most grants (especially from the government) preclude working in a rented lab because they require you to prove that you have an established lab space ready to go. The way to prove that you have that is a letter from an existing organization. And the only existing organizations that would sign that letter are universities.

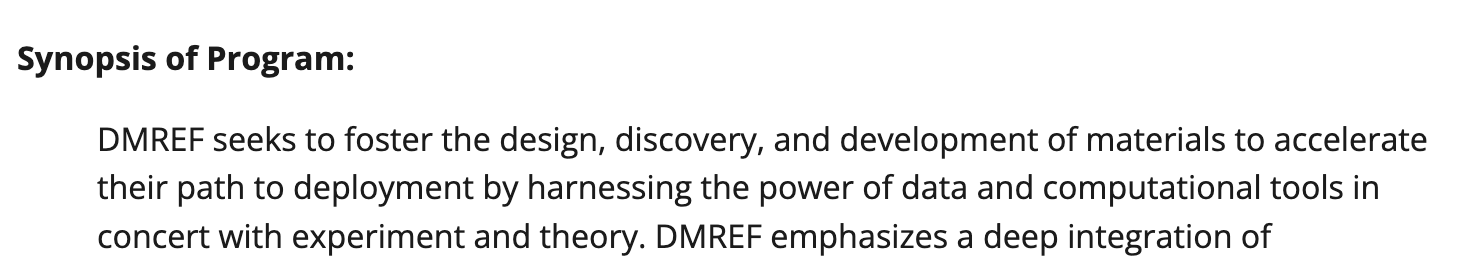

Funding

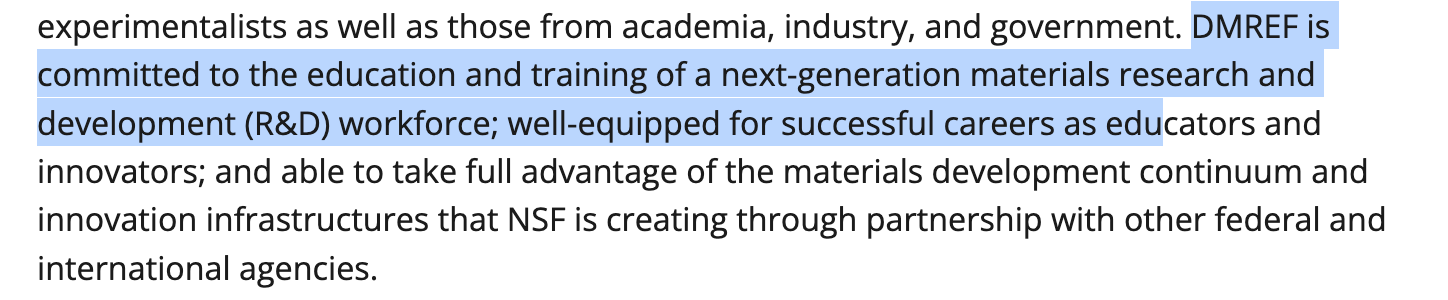

Many research grants are explicitly for people associated with universities and have earmarks for funding graduate students. This $40 million funding call for new ways to create materials is one of many examples of both government and nonprofit funding that is explicitly only for professors or institutions of higher learning.

Two main reasonable-at-the-time factors created this situation:

- Government research funding is explicitly dual-purpose: it’s meant both to support the actual research and to train the next generation of technical talent. This combination made more sense before universities took on the role of “technology producing engine.”

- Many funders don’t have the bandwidth to evaluate whether an individual or organization is qualified so they fall back on heuristics like “is this person a tenure-track professor at an accredited institution?”

As a result, many funding pathways are inaccessible for non-academic, non-profit-maximizing institutions. Restricting non-academic institutions’ ability to access funding further solidifies academia’s monopoly.

Mindsets, Skills, and Incentives

Most deep technical training still happens at universities. But a PhD program doesn’t just build technical knowledge and train hands-on skills; it inducts you into the academic mindset. It’s certainly possible to do a PhD without adopting this mindset but it’s an uphill battle. Our environment shapes our thoughts! Institutions shape how individuals interact! So we end up with a situation where everybody with deep technical training has been marinating in the academic mindset for years.

The dominance of the academic mindset in research has many downstream effects: some are pedestrian, like the prevalence of horrific styles in technical writing; others are profound, like prioritizing novelty as a metric for an idea’s quality.

The academic system also shapes the types of skills that these deep technical experts develop; among other things, it makes them very good at discovery and invention, but not necessarily scaling or implementation.

The academic mindset warps incentives far beyond universities. Researchers in many non-university organizations still play the academic incentives game both because they were all trained in academia and because “tenured professor at a top research university” is still the highest-status position in the research world. As a result, academic incentives still warp the work that people do at national labs, nonprofit research organizations, and even corporate research labs.

The structure of research

At the most abstract level, academia has a monopoly on how society thinks about structuring research. Specifically, we assume that the core unit of research is a principal investigator who is primarily responsible for coming up with research ideas and who runs a lab staffed by anywhere from a few to a few dozen other people. This model is implicitly baked into everything from how we talk about research agendas, to how we deploy money, to how researchers think about their careers.

Academia’s monopoly on the people actually doing benchwork means that it’s very hard to shift incentives in the research ecosystem; most interventions still involve work done by people in the academic system. New institutes are housed at universities or have PIs who are also professors; new grant schemes, prizes or even funding agencies ultimately fund academics; the people joining new fields or using new ways of publishing are ultimately still embedded in academia.

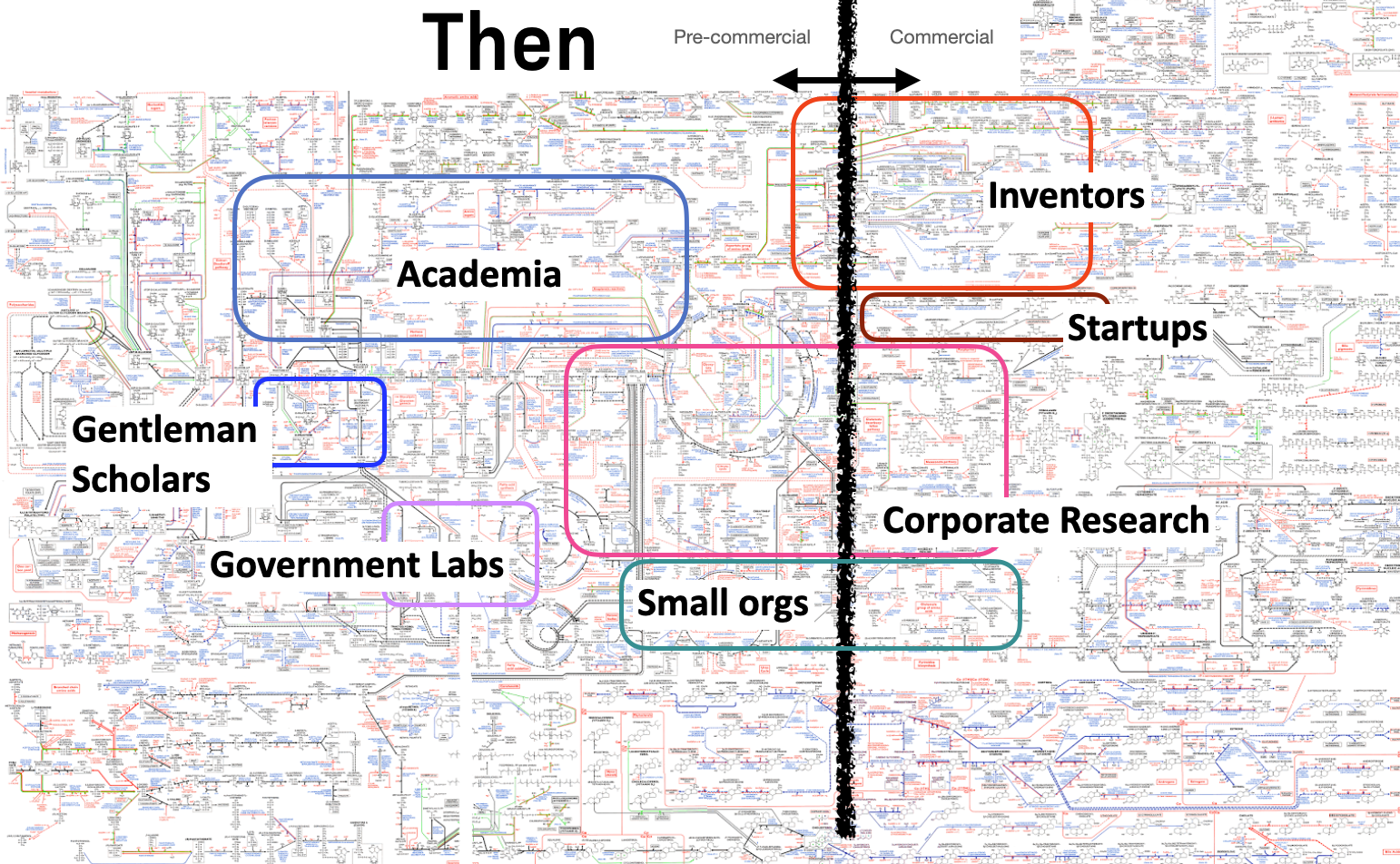

In the not-so-distant past, non- and pre-commercial research happened across a number of different institutions with different sets of incentives: corporate research, small research organizations like BBN, inventors in their basements, gentlemen scholars, and others.

It’s funny that at the same time that pre-commercial research has become more important than ever, we’ve ended up in a world where it is dominated by a single institution that, far from specializing in this critical role, is a massive agglomeration of roles that have been accreting since the Middle Ages. The university’s monopoly on pre-commercial research is part of a much bigger story that you should care about even if you think research is doing fine. In order to actually unpack it, we need to go back to the 12th century to understand where the university came from and how it acquired the massive bundle of roles it has today.

2. Universities have been accumulating roles for hundreds of years

Or, a disgustingly abbreviated history of the modern university with a special focus on research and the creation of new knowledge and technology.

Medieval Universities

The first universities emerged in the 12th century to train clergy, who needed to be literate (at least in Latin) and have some idea about theology. Institutions of higher education had existed before, and academia arguably predates Socrates, but we can trace our modern institutions back to these medieval scholastic guilds in places like Paris, Bologna, and Oxford. In a recognizable pattern, the type of person who became a professor was also the type of person who liked trying to figure out how the world worked and arguing about philosophy. Many of the people who became clergy were the second sons of noblemen, who quickly realized that learning to read and a smattering of philosophy was useful. Nobles started sending their other children to universities to learn as well.

So from the start, you have this bundle of vocational training (although very scoped), general skills training, moral instruction, and inquiry into the nature of the universe. The business model was straightforward: donations paid for the original structures, and then students paid instructors directly; there were a small number of endowed positions whose salaries weren’t tied to teaching.

Also from the start, university professors spent a lot of time trying to come up with new ideas about the world; however, ‘natural philosophy’ – what we would now call science – was just a small fraction of that work. “How many angels can dance on the head of a pin?” was as legitimate a research question as “why does light create a rainbow when it goes through a prism?” There was no sense that these questions had any bearing on practical life or should be interesting to anybody besides other philosophers. This thread of “academia = pure ideas about philosophy and the truth of how the world works” remains incredibly strong throughout the university story. The centrality of ideas and philosophical roots created another strong thread that we will see later: the deep importance of who came up with an idea first.

The result of this setup was that professors were incentivized to teach well enough to get paid (unless they had an endowed chair) and to create ideas that impressed other professors. Academia has always been the game where you gain status by getting attention for new knowledge.

Early Modern Universities

The tight coupling between academia and pure ideas meant that when the idea of empiricism (that is, you should observe things and even do experiments) started to creep into natural philosophy in the 17th century, much of the work that we would now call “scientific research” happened outside of universities. Creating new technology was even further beyond the pale. Perhaps fewer than a quarter of the Royal Society of London’s members were associated with a university when it was first formed.

(Side note: the terms “science” and “scientist” didn’t exist until the 19th century so I will stick with the term “natural philosophy” until then.)

While a lot of natural philosophy was done outside of universities, the people doing natural philosophy still aspired to be academics. They were concerned with philosophy (remember, natural philosophers!) and discovering truths of the universe.

The creation of the Royal Society in 1660, and empiricism more broadly, also birthed the twin phenomena of:

- Research becoming expensive: now that research involved more than just sitting around and thinking, natural philosophers needed funding beyond just living expenses. They needed funding to hire assistants, build experiments, and procure consumable materials. Materials which, in the case of alchemy, could be quite pricey.

- Researchers justifying their work to wealthy non-technical patrons in order to get that funding. The King was basically a philanthropist and the government fused into one person at this point.

Research papers were invented in the 17th century as well and had several purposes:

- A way for researchers to know what other researchers were doing. (Note that these papers were in no way meant for public consumption.)

- A way for researchers to claim primacy over an idea. (Remember, the game is to be the first person to come up with a new idea!)

- A way to get researchers to share intermediate results. Without the incentive of the paper, people would keep all their findings to themselves until they could create a massive groundbreaking book. Not infrequently, a researcher would die before he could create this magnum opus.

During the early modern period, while natural philosophy made great leaps and bounds, the university’s role and business model didn’t change much: it was still primarily focused on training clergy and teaching philosophy and the arts to young aristocrats. While some scientific researchers were affiliated with universities (Newton famously held the Lucasian Chair of Mathematics, which paid his salary independent of teaching), the majority were not. Isaac Newton became a professor mostly as a way to have his living costs covered while doing relatively little work – he lectured but wasn’t particularly good at it. Professors holding a role that is notionally about teaching while spending most of their energy on research and being terrible teachers is a tradition that continues to this day.

19th century

The medieval model of the university continued until the 19th century ushered in dramatic changes in the roles and structure of the university. Universities shifted from being effectively an arm of the church to an arm of the state focused primarily on disseminating knowledge and creating the skilled workforce needed for effective bureaucracies, powerful militaries, and strong economies. The 19th century also saw the invention of the research university, with the university taking on its distinctly modern role as a center of research.

During the 19th century the vast majority of Europe organized itself into nation-states. Everybody now knew that science (which had clearly become its own thing, separate from philosophy) and arts (which at this point included what we would now call engineering) were clearly coupled to the military and economic fates of these nation-states: from the marine chronometer enabling the British navy to know where it was anywhere in the world, to growing and discovering nitre for creating gunpowder, to the looms and other inventions that drove the beginning of the Industrial Revolution. However, at the beginning of the 19th century, most of this work was not happening at universities!

Universities at the beginning of the 19th century looked almost the same as they did during the medieval and early modern periods: relatively small-scale educational institutions focused on vocational training for priests and moral/arts instruction for aristocrats. However, modern nation-states needed a legion of competent administrators 2 to staff their new bureaucracies, which did many more jobs than those of previous states: from running mass education programs to fielding modern armies. These administrators needed training. Countries noticed that there was already an institution set up to train people with the skills that administrators and bureaucrats needed. Remember, one of the university’s earliest roles was to train clergy and for a long time the Catholic Church was the biggest bureaucracy in Europe.

Many continental European countries, starting with Prussia and followed closely by France and Italy, began to build new universities and leverage old ones to train the new administrative class. This is really the point where “training the elite” took off as a big part of the university’s portfolio of social roles. Universities and the State became tightly coupled: previously, curriculum and doctrine were set by the Church or individual schools; under the new system, the state was heavily involved in the internals of universities, from staffing to structure to purpose.

Technically, not all of these schools were actually “universities.” In the 19th century, universities were only one type of institution of higher education among a whole ecosystem that included polytechnic schools, grandes écoles, and law and other professional schools. Each of these schools operated very differently from the German university model (which, spoiler alert, eventually won out and is what today we would call a university).

With the exception of Prussia, research was a sideshow during the initial shift of universities from largely insulated institutions for training clergy and doing philosophy to cornerstones of the nation-state. Some scientific researchers continued the tradition of using teaching as an income source to sustain their research side-gig, but nobody outside of Prussia saw the role of the university as doing research. From A History of the University in Europe: Volume 3:

Claude Bernard (1813–78) had to make his very important physiological discoveries in a cellar. Louis Pasteur (1822–95) also carried out most of his experiments on fermentation in two attic rooms.

However, the Prussian system put research front and center for both the humanities and the “arts and sciences” (which would become what we call STEM – remember, engineering was considered an “art” until the 20th century). Wilhelm von Humboldt, the architect of the Prussian university system 3 believed universities should not just be places where established knowledge is transmitted to students, but where new knowledge is created through research. He argued that teaching should be closely linked to research, so students could actively participate in the discovery of new knowledge, rather than passively receiving it.

Prussia’s 1871 defeat of France in the Franco-Prussian war solidified the Prussian higher education system as the one to emulate. Leaders across the world (including France itself!) 4 chalked Prussia’s victory up to its superior education system: Prussian officers were trained to act with far more independence than their French counterparts, which was in part traced back to the Humboldtian research university exposing students to the independent thinking in novel situations that research demands. Superior technology that was downstream of university research was another key component of the Prussian victory, from breech-loading steel cannons enabled by metallurgy research to telegraphs improved by developments in electromagnetism. (From a “where is technology created?” standpoint, these technologies were created at the companies that also manufactured them, but they were built by people trained at the new research universities, which had also advanced the scientific understanding of metallurgy far enough for industrial researchers to apply it.)

Over the next several decades countries and individuals across the world created new institutions explicitly following the German/Humboldtian model (the University of Chicago and Johns Hopkins in the US are prominent examples). Existing institutions from Oxford to MIT shifted towards the German model as well. That trend would continue into the 20th century until today we use the term “university” to mean almost any institution of higher learning.

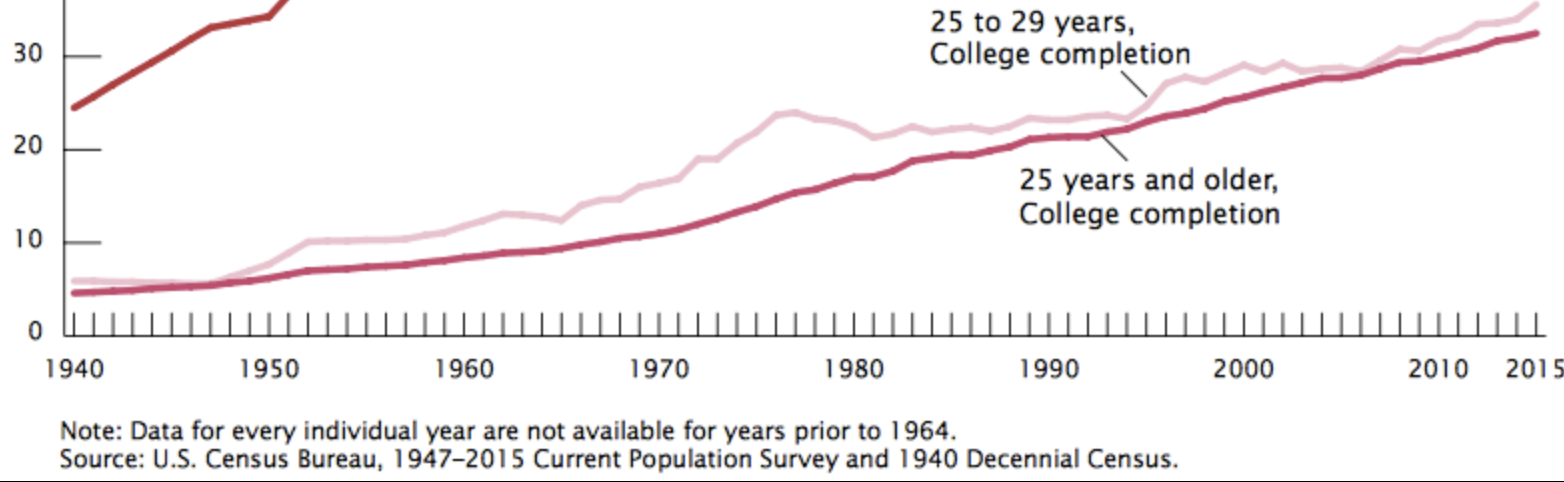

Over the course of the 19th century, the number of people attending universities drastically increased. In 1840, one in 3375 Europeans attended university and in 1900, one in 1410 did. That is a massive increase in enrollment given population changes, but university degrees were still a rarity and largely unnecessary for many jobs.

Similar dynamics were happening on the other side of the Atlantic — in large part following the European trends.

Before the 19th century, American higher education organizations like Harvard, Yale, and Princeton (which was the College of New Jersey until 1896) were focused on training clergy and liberal arts education for the children of the wealthy. None of these American schools were actually “universities” until they started following the German model and granting doctorates in the late 19th century.

American higher education also took on a role in disseminating new technical knowledge to boost the economy. In the middle of the 19th century, local, state, and federal governments in the US realized that new research in agriculture and manufacturing could boost farming and industrial productivity. Congress passed legislation that created the land grant colleges in 1862. These colleges (note: not universities yet!) included many names you would recognize like Cornell, Purdue, UC Berkeley, and more. These schools were explicitly chartered to use science and technology to aid industry and farming in their areas. Around the same time, technical institutes were springing up (MIT in 1861, Worcester Polytechnic Institute in 1865, Stevens Institute of Technology in 1870). Both the land grant colleges and technical institutes were originally focused on imparting knowledge rather than creating it, but this would quickly change.

Like their European counterparts, the Americans became enamored with the German research university. Johns Hopkins was the first American university created explicitly in the Humboldtian model. It was founded in 1876, five years after the conclusion of the Franco-Prussian war. The University of Chicago, founded in 1890, followed suit. During the same period, existing colleges added graduate schools and started doing research: Harvard College created a graduate school in 1872 and the College of New Jersey became Princeton University in 1896.

By the end of the 19th century, universities on both sides of the Atlantic had transformed from an essentially medieval institution to a modern institution we might recognize today:

- Institutions with heavy emphasis on research both in the humanities and in the sciences.

- Strong coupling between universities and the state: states used the universities both to create and transmit knowledge that improved economic and military capabilities and to train people who would eventually work for the state in bureaucracies or militaries. In exchange, most universities were heavily subsidized by states.

- Vocational training was a big part of the university’s role (especially if you look at money flows).

However, there were some notable differences from today:

- The role of the university’s research with respect to technology was to figure out underlying principles and train researchers as inputs to industrial research done by companies and other organizations.

- By the end of the 19th century, only one in 1000 people got higher education, so while university students did have a big effect on politics, universities were less central to culture.

- While the Humboldtian research university was starting to dominate, there was still an ecosystem of different kinds of schools with different purposes.

- A lot of professional schooling happened primarily outside of the university in independent institutions like law schools and medical schools.

- While vocational training was a function of the universities, there were only a few jobs that required degrees.

- Instead of being society’s primary source of science and technology, a big role of university research was in service of education.

This bundle would last until the middle of the 20th century.

20th Century

The 20th century saw massive shifts in the university’s societal roles. A combination of demographic and cultural trends, government policy, and new technologies led to a rapid agglomeration of many of the roles we now associate with the university: as the dominant form of transitioning to adulthood, a credentialing agency, a think tank, a collection of sports teams, a hedge fund, and more. All of this was overlaid on the structures that had evolved in the 19th century and before.

The role of universities in science and technology shifted as well. At the beginning of the 20th century, universities were primarily educational institutions that acted as a resource for other institutions and the economy: a stock of knowledge and a training ground for technical experts. Driven first by WWII and the Cold War, and then by new technologies and economic conditions in the 70s, universities took on the role of an economic engine that we expect to produce a flow of valuable technologies and most of our science.

The WWII Discontinuity

To a large extent, the role of the university in the first half of the 20th century was a continuation of the 19th century. World War II and the subsequent Cold War changed all of that.

World War II started a flood of money to university research that continues today. This influx of research dollars drastically shifted the role of the university. Many people fail to appreciate the magnitude of these shifts and the second-order consequences they created.

A consensus emerged at the highest level of leadership on both sides of WWII that new science-based technologies would be critical to winning the war. This attitude was a shift from WWI, where technologies developed during the war were used but ultimately had little effect on the outcome. There was no single driving factor for the change — it was some combination of extrapolating what new technologies could have done in WWI, developments during the 1930s, early German technology-driven success (blitzkrieg and submarine warfare), and leaders like Hitler and Churchill nerding out about new technologies.

Like almost every institution in the United States, universities were part of the total war effort. In order to compete on technology, the USA needed to drastically increase its R&D capabilities. Industrial research orgs like Bell Labs or GE’s labs shifted their focus to the war; several new organizations spun up to build everything from radar to the atomic bomb. Universities had a huge population of technically trained people, and the war production system leaned heavily on them as production centers of technology. It didn’t hurt that the leader of the US Office of Scientific Research and Development, Vannevar Bush, was a former MIT professor. While focusing on applied research and technology wasn’t much of a shift for MIT (remember how there were many university niches), it was a big shift for many more traditionally academic institutions.

This surge in government funding didn’t end with the surrenders of Germany and Japan. The Cold War arguably began even before WWII ended; both sides deeply internalized the lessons exemplified by radar and the Manhattan Project that scientific and technological superiority were critical for military strength. The US government acted on this by pouring money into research, both in the exact and inexact sciences. A lot of that money ended up at universities, which went on hiring and building sprees.

Globally, the American university and research system that emerged from WWII became basically the only game in town because of the level of funding, the number of European scientists who had immigrated, and the fact that so much of the developed world had been bombed into rubble. As the world rebuilt, the American university system was one of our cultural exports. That is why, although this account focuses primarily on American universities in the second half of the 20th century, the conclusions hold true for a lot of the world.

Second-order consequences of WWII and the Cold War

The changes in university funding and their relationship with the state during WWII and the Cold War had many second-order consequences on the role of universities that still haven’t sunk into cultural consciousness:

Top universities are not undergraduate educational institutions. If you’re like most people, 5 you think of “universities” as primarily educational institutions; in particular, institutions of undergraduate education that serve as a last educational stop for professionally-destined late-teens-and-early-twenties before going out into the “real world.” This perspective makes sense: undergraduate education is the main touchpoint with universities that most people have (either themselves or through people they know). It’s also wrong in some important ways.

While many universities are indeed primarily educational organizations, the top universities that dominate the conversation about universities are no longer primarily educational institutions.

Before WWII, research funding was just a fraction of university budgets: tuition, philanthropy, and (in rare cases) industry contracts made up the majority of revenue. 6 Today, major research universities get more money from research grants (much of it from the federal government) than any other source: in 2023, MIT received $608M in direct research funding, $248M from research overhead, and $415M in tuition; Princeton received $406M in government grants and contracts and $154M in tuition and fees.

These huge pots of money for research, and the status that comes with them, mean that the way higher education institutions become top universities is by increasing the amount of research that they do. Just look at the list of types of institutions of higher education from the Carnegie Classification System and think honestly about which of these seem higher status:

- Associate’s Colleges

- Baccalaureate/Associate’s Colleges

- Baccalaureate Colleges

- Master’s Colleges & Universities

- Doctoral Universities

- Special Focus Two-Year

- Special Focus Four-Year

- Tribal Colleges and Universities

Doctoral universities are by far the highest status organizations on the list. Thanks to the Prussians, the thing that distinguishes a doctoral university from other forms of higher education is that it does original research.

The funding and status associated with being a research university exert a pressure that slowly pushes all forms of higher education to become research universities. That pressure also creates a uniform set of institutional affordances and constraints. It’s like institutional carcinization.

Of course, that Carnegie list also includes many institutions of higher education that are not universities. This fact is worth flagging because it means that when the discourse about the role of universities focuses on undergraduate education, people are making a category error. People often use the Ivy League and other top universities as a stand-in for battles over the state of undergraduate education, but those institutions’ role is not primarily undergraduate education.

Shifts in university research. The paradigm shifts created by WWII and the Cold War also changed how research itself happens.

For the sake of expediency during the war, the government shifted the burden of research contracting and administration from individual labs to centralized offices at universities. This shift did lighten administrative load: it would be absurd for each lab to hire its own grant administrator. However, it also changed the role of the university with respect to researchers. Before, it was a thin administrative layer across effectively independent operators loosely organized into departments. Now the university reaches deep into the affairs of individual labs, from owning the patents that labs create, to deciding how labs pay, hire, and manage graduate students, to taking a large share of grants as overhead.

Federal research funding created two goals for university research:

- To train technical experts who were in some ways seen as the front line of national power in WWII and the Cold War.

- To directly produce the research that would enhance national power.

(Recall that in the 19th and early 20th century, the role of university research was much more the former than the latter.)

This role duality has been encoded in law: many research grants for cutting-edge research are also explicitly “training grants” that earmark the majority of funding for graduate students and postdocs.

I won’t dig too deeply into the downstream consequences here, but they include:

- A system where much of our cutting-edge science and technology work is done by trainees. (Imagine if companies had interns build their new products.)

- Artificially depressing the cost of research labor.

-

Many labs shifted from being a professor working on their own

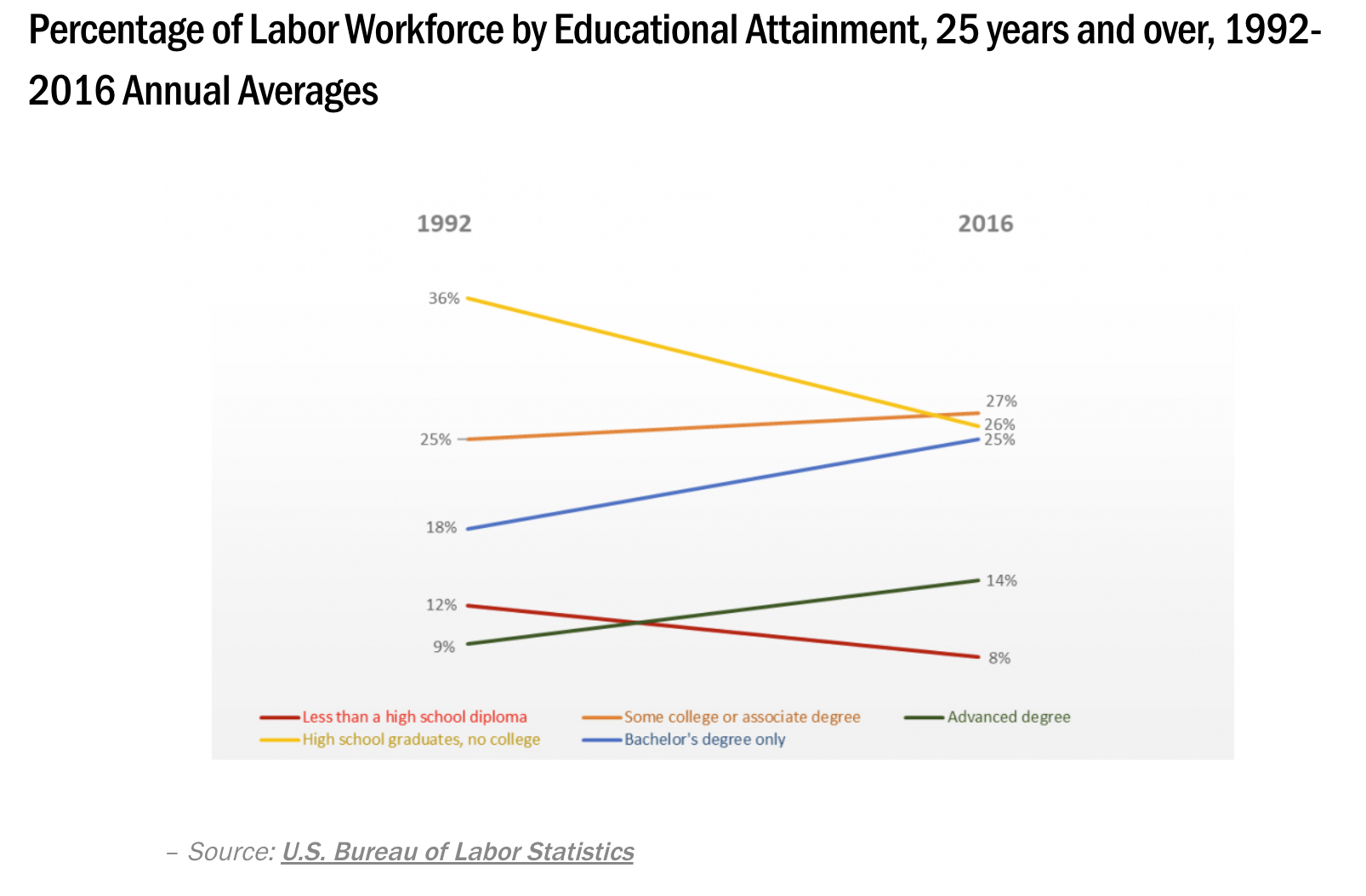

research and giving advice to a few more-or-less independent graduate